Geometry-aware 4D Video Generation

for Robot Manipulation

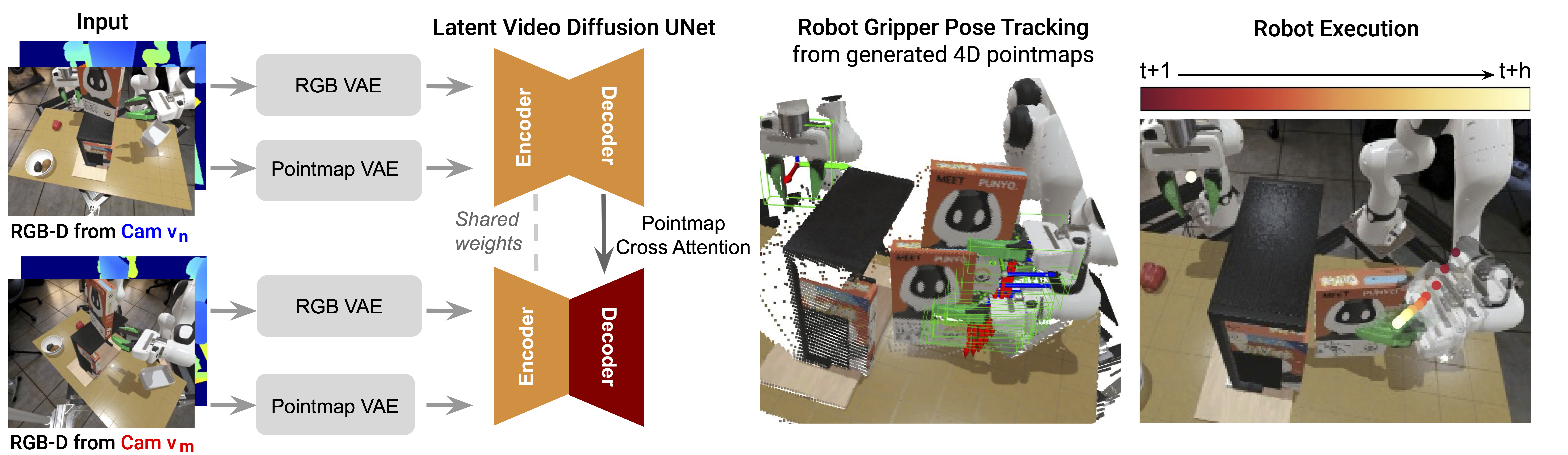

Method

Our model takes RGB-D inputs from two camera viewpoints and predicts future pointmaps alongside RGB images. To enforce geometric consistency across views, we introduce cross-attention layers in the U-Net decoders for pointmap prediction. The resulting 4D video can then be used with off-the-shelf RGB-D pose tracking methods to recover the 6-DoF pose of the robot end-effector, enabling direct application to downstream manipulation tasks.

(a) StoreCerealBoxUnderShelf 🥣

In this task, a single robot arm picks up a cereal box from the top of a shelf and inserts it into the shelf below. Occlusions occur during insertion, especially from certain camera viewpoints, making multi-view predictions essential. Additionally, the pick-and-insert action requires spatial understanding and precision.

Baselines:

Policy Rollout (unseen views):

(b) PutSpatulaOnTable 👩🍳

A single robot arm retrieves a spatula from a utensil crock and places it on the left side of the table. This task requires precise manipulation to successfully grasp the narrow object.

Baselines:

Policy Rollout (unseen views):

(c) PlaceAppleFromBowlIntoBin 🍎

One robot arm picks up an apple from a bowl on the left side of the table and places it on a shelf; a second arm then picks up the apple and deposits it into a bin on the right side. This is a long-horizon, bimanual task that tests the model's ability to predict both temporally and spatially consistent trajectories.

Baselines:

Policy Rollout (unseen views):

(d) Real World Results 🌍

By finetuning a policy trained in simulation, our model transfers to real world RGB-D videos for several manipulation tasks.